How To Install Elasticsearch, Logstash, and Kibana (ELK Stack) on CentOS/RHEL 7

If you are, or have been, in charge of inspecting and analyzing system logs in Linux, you know what a nightmare that task can become when multiple services are monitored simultaneously. In the past, that task had to be done mostly by hand, with each log type handled separately. Fortunately, the combination of Elasticsearch, Logstash, and Kibana on the server side, along with Filebeat on the client side, makes that once difficult task look like a walk in the park today.

The first three components form what is called an ELK stack, whose main purpose is to collect logs from multiple servers at the same time (also known as centralized logging). A built-in web interface (Kibana) allows you to inspect logs at a glance for easier comparison and troubleshooting. The client logs are sent to the central server by Filebeat, which can be described as a log shipping agent.

Let’s see how all of these pieces fit together. Our test environment consists of the following machines:

- Central Server: CentOS 7 (IP address: 1…), … GB of RAM.
- Client #1: CentOS 7, 1 GB of RAM.
- Client #2: Debian 8, … GB of RAM.

Less RAM on the clients will make little difference, if any.

Installing ELK Stack on the Server

Let’s begin by installing the ELK stack on the server, along with a brief explanation of what each component does: Elasticsearch stores the logs that are sent by the clients.

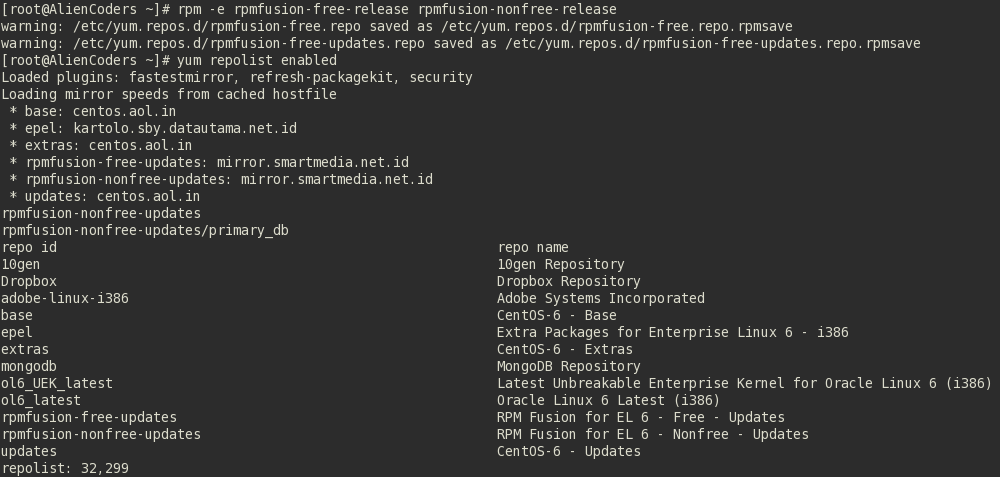

Logstash processes those logs. Kibana provides the web interface that will help us inspect and analyze the logs.

Install the following packages on the central server. First off, we will install Java JDK version 8 (update 1…), which the ELK components depend on. You may want to check the Java downloads page first to see if a newer update is available. Then update the system: `# yum update`.

To install the latest versions of Elasticsearch, Logstash, and Kibana, we will have to create repositories for yum manually as follows.

Enable Elasticsearch Repository

Import the Elasticsearch public GPG key into the rpm package manager: `# rpm --import http://packages.…/GPG-KEY-elasticsearch`.

Insert the following lines into the repository configuration file elasticsearch.…:
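The repository lines themselves are missing from this copy of the article. As a hedged sketch only, the shell function below writes a file in the standard yum `.repo` format. `write_repo` is a hypothetical helper. The `baseurl` and `gpgkey` values you pass are placeholders; take the real values from Elastic's installation documentation for the version you want.

```sh
#!/bin/sh
# Hypothetical helper: write a yum repository definition file.
# Usage: write_repo DIR REPO_ID DISPLAY_NAME BASEURL GPGKEY_URL
# BASEURL and GPGKEY_URL are placeholders: use the values from Elastic's docs.
write_repo() {
  dir=$1 repo_id=$2 display_name=$3 baseurl=$4 gpgkey=$5
  mkdir -p "$dir" || return 1
  # Standard .repo keys: gpgcheck=1 makes yum verify package signatures,
  # and gpgkey points at the signing key.
  cat > "$dir/$repo_id.repo" <<EOF
[$repo_id]
name=$display_name
baseurl=$baseurl
gpgcheck=1
gpgkey=$gpgkey
enabled=1
EOF
}
```

For example, as root: `write_repo /etc/yum.repos.d elasticsearch "Elasticsearch repository" "<baseurl>" "<gpgkey-url>"`. To reuse the helper for the Logstash and Kibana repositories below, change the repository ID and URLs.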

Install the Elasticsearch package: `# yum install elasticsearch`. When the installation is complete, you will be prompted to start and enable Elasticsearch:

[Figure: Install Elasticsearch in Linux]

Start and enable the service: `# systemctl daemon-reload`. Allow traffic through TCP port 9… in the firewall. Check whether Elasticsearch responds to simple requests over HTTP: `# curl -X GET http://localhost:9…`.
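Only `systemctl daemon-reload` survives in this copy, and the port number is truncated. The sketch below uses a hypothetical `enable_service_with_port` helper to show the usual sequence with standard `systemctl` and `firewall-cmd` commands. It takes the service name and port as arguments. Setting `DRY_RUN=1` prints the commands instead of running them, so you can review them before applying them as root.

```sh
#!/bin/sh
# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: reload systemd, enable and start a service, then open
# one TCP port in firewalld (runtime rule now, permanent rule for reboots).
# Usage: enable_service_with_port SERVICE PORT
enable_service_with_port() {
  service=$1 port=$2
  run systemctl daemon-reload || return 1
  run systemctl enable "$service" || return 1
  run systemctl start "$service" || return 1
  run firewall-cmd --add-port="$port/tcp" || return 1
  run firewall-cmd --add-port="$port/tcp" --permanent
}
```

The same sequence applies later to `logstash` and `kibana`, each with its own port.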

The output of the above command should be similar to the following:

[Figure: Verify Elasticsearch Installation]

Make sure you complete the above steps before proceeding with Logstash. Since Logstash and Kibana share the Elasticsearch GPG key, there is no need to re-import it before installing their packages.

Enable Logstash Repository

Insert the following lines into the repository configuration file logstash.…:

Install the Logstash package: `# yum install logstash`. Add an SSL certificate based on the IP address of the ELK server by inserting the following line below the …:

Generate a self-signed certificate valid for 3…

Configure the Logstash input, output, and filter files.

Input: create /etc/logstash/conf.… This is necessary for Logstash to “learn” how to process beats coming from the clients. Make sure the certificate and key paths match the ones from the previous step: /etc/logstash/conf.…
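The certificate steps above (adding the server's IP address as a subject alternative name, then generating a self-signed certificate) can be sketched as follows. This variant rests on assumptions and is not the article's exact procedure. Instead of editing the system-wide `openssl.cnf`, it writes a small private config that carries the server IP as `subjectAltName`. The output names `server.crt` and `server.key` are hypothetical, and the validity period is an argument because the value is truncated in the text.

```sh
#!/bin/sh
# Hypothetical helper: create a self-signed certificate whose subjectAltName
# is the ELK server's IP address, using a private config instead of the
# system-wide openssl.cnf.
# Usage: make_selfsigned_cert OUT_DIR SERVER_IP DAYS
make_selfsigned_cert() {
  out_dir=$1 server_ip=$2 days=$3
  mkdir -p "$out_dir/certs" "$out_dir/private" || return 1
  cfg="$out_dir/san.cnf"
  cat > "$cfg" <<EOF
[req]
distinguished_name = req_dn
x509_extensions = v3_ca
prompt = no

[req_dn]
CN = $server_ip

[v3_ca]
# Clients connecting by IP match this entry against the address they dialed.
subjectAltName = IP:$server_ip
# The certificate doubles as its own CA for the clients.
basicConstraints = CA:true
EOF
  # -x509: produce a self-signed certificate instead of a request;
  # -nodes: leave the private key unencrypted so the service can read it.
  openssl req -config "$cfg" -x509 -days "$days" -nodes -newkey rsa:2048 \
    -keyout "$out_dir/private/server.key" -out "$out_dir/certs/server.crt"
}
```

Whatever paths you choose, the Logstash input file and the copies on the clients must reference the same certificate.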

We will log syslog messages for simplicity: /etc/logstash/conf.…

Verify the Logstash configuration files: `# service logstash configtest`.

[Figure: Verify Logstash Configuration]

Start and enable Logstash: `# systemctl daemon-reload`. Configure the firewall to allow Logstash to receive the logs from the clients (TCP port 5…).
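The configuration file contents are not reproduced in this copy, so here is a minimal sketch. It assumes Logstash 2.x-era option names for the `beats` input and `elasticsearch` output; newer releases renamed some options, notably the SSL ones. It also assumes the clients tag their events with type `syslog`. The hypothetical `write_logstash_confs` function writes `input.conf`, `filter.conf`, and `output.conf` into a directory you pass in.

```sh
#!/bin/sh
# Hypothetical helper: write Beats input, syslog filter and Elasticsearch
# output files for Logstash (2.x-era option names; check your version).
# Usage: write_logstash_confs CONF_DIR BEATS_PORT CERT_PATH KEY_PATH ES_HOST:PORT
write_logstash_confs() {
  conf_dir=$1 beats_port=$2 cert=$3 key=$4 es=$5
  mkdir -p "$conf_dir" || return 1

  # Input: listen for Filebeat connections over SSL.
  cat > "$conf_dir/input.conf" <<EOF
input {
  beats {
    port => $beats_port
    ssl => true
    ssl_certificate => "$cert"
    ssl_key => "$key"
  }
}
EOF

  # Filter: parse classic syslog lines and use their timestamp as event time.
  cat > "$conf_dir/filter.conf" <<'EOF'
filter {
  if [type] == "syslog" {
    grok {
      match => { "message" => "%{SYSLOGLINE}" }
    }
    date {
      match => [ "timestamp", "MMM  d HH:mm:ss", "MMM dd HH:mm:ss" ]
    }
  }
}
EOF

  # Output: one index per Beat per day, e.g. filebeat-2016.09.01.
  cat > "$conf_dir/output.conf" <<EOF
output {
  elasticsearch {
    hosts => ["$es"]
    index => "%{[@metadata][beat]}-%{+YYYY.MM.dd}"
  }
}
EOF
}
```

After writing the files, validate them with the `configtest` command above before restarting Logstash.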

Enable Kibana Repository

Insert the following lines into the repository configuration file kibana.…:

Install the Kibana package: `# yum install kibana`.

Start and enable Kibana: `# systemctl daemon-reload`. Make sure you can access Kibana’s web interface from another computer (allow traffic on TCP port 5…). Launch Kibana (http://1…):

[Figure: Access Kibana Web Interface]

We will return here after we have installed and configured Filebeat on the clients.

Install Filebeat on the Client Servers
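Before opening a browser, a small sketch can confirm from another computer that the server's port is reachable. It assumes bash, for its `/dev/tcp` redirection (a bash feature rather than a real file), and the coreutils `timeout` command. `port_open` is a hypothetical helper. The port is an argument because it is truncated in the text.

```sh
#!/bin/sh
# Hypothetical helper: succeed if HOST accepts TCP connections on PORT.
# Relies on bash's /dev/tcp redirection and coreutils `timeout`.
# Usage: port_open HOST PORT [TIMEOUT_SECONDS]
port_open() {
  host=$1 port=$2 secs=${3:-3}
  # bash opens a TCP connection when redirecting to /dev/tcp/HOST/PORT;
  # a refused or timed-out connection makes the command fail.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}
```

For example: `port_open <server-ip> <kibana-port> && echo reachable`.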

We will show you how to do this for Client #1 (repeat for Client #2 afterwards, changing paths if applicable to your distribution). Copy the SSL certificate from the server to the clients: `# scp /etc/pki/tls/certs/logstash-forwarder.…`
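It can be worth confirming that the certificate arrived intact. One way is to compare SHA-256 fingerprints computed with `openssl x509 -fingerprint`, as sketched below with hypothetical helper names. Run `cert_fingerprint` on the server and on each client and compare the output, or use `same_cert` when both files are on one machine.

```sh
#!/bin/sh
# Hypothetical helper: print only the SHA-256 fingerprint of a certificate
# (the part after "... Fingerprint=").
# Usage: cert_fingerprint CERT_FILE
cert_fingerprint() {
  openssl x509 -noout -fingerprint -sha256 -in "$1" | sed 's/^[^=]*=//'
}

# Succeeds if both certificates have the same fingerprint. An empty
# fingerprint (e.g. unreadable file) counts as a mismatch.
# Usage: same_cert CERT_A CERT_B
same_cert() {
  a=$(cert_fingerprint "$1" 2>/dev/null)
  b=$(cert_fingerprint "$2" 2>/dev/null)
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```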

Import the Elasticsearch public GPG key into the rpm package manager: `# rpm --import http://packages.…/GPG-KEY-elasticsearch`. Create a repository for Filebeat (/etc/yum.…) in CentOS-based distributions.

To configure the source for installing Filebeat on Debian and its derivatives, first run: `# aptitude install apt-transport-https`.

Install the Filebeat package: `# yum install filebeat` (on Debian and its derivatives, use aptitude instead).
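The two clients need different installation commands, so a script meant to run on both could branch on the distribution. A minimal sketch, assuming `/etc/os-release` is present (it is on CentOS 7 and Debian 8): the hypothetical `pkg_family` helper reads `ID` and `ID_LIKE` and prints `rpm` or `deb`.

```sh
#!/bin/sh
# Hypothetical helper: classify the system as rpm- or deb-based.
# Usage: pkg_family [OS_RELEASE_FILE]   -> prints rpm, deb or unknown
pkg_family() {
  file=${1:-/etc/os-release}
  # "." searches PATH for names without a slash, so force a path.
  case $file in */*) ;; *) file=./$file ;; esac
  [ -r "$file" ] || { echo unknown; return 1; }
  # os-release is shell-compatible: source it in a subshell.
  ids=$(. "$file"; printf '%s %s' "$ID" "${ID_LIKE:-}")
  for id in $ids; do
    case $id in
      rhel|centos|fedora) echo rpm; return 0 ;;
      debian|ubuntu) echo deb; return 0 ;;
    esac
  done
  echo unknown
  return 1
}
```

For example: `case $(pkg_family) in rpm) yum install filebeat ;; deb) aptitude install filebeat ;; esac`, once the matching repository or source has been configured.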

Start and enable Filebeat: `# systemctl start filebeat`.

Configure Filebeat

A word of caution here: Filebeat configuration is stored in a YAML file, which requires strict indentation, so be careful as you edit /etc/filebeat/filebeat.… Under paths, indicate which log files should be “shipped” to the ELK server. Under prospectors: input…
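The configuration itself is not reproduced here. The sketch below writes a minimal Filebeat file and then checks it for tab characters, which YAML does not allow for indentation. It assumes the older Filebeat 1.x key layout (`prospectors`, `input_type`, `document_type`, `tls`). Later versions renamed these keys, so compare with the reference for your release. `write_filebeat_yml` and `yaml_has_tabs` are hypothetical helpers.

```sh
#!/bin/sh
# Hypothetical helper: write a minimal Filebeat 1.x-style configuration.
# Usage: write_filebeat_yml OUT_FILE LOGSTASH_HOST:PORT CA_CERT LOG_FILE...
write_filebeat_yml() {
  out=$1 logstash=$2 ca=$3
  shift 3
  {
    # Indentation uses spaces only; each level is two spaces.
    printf '%s\n' 'filebeat:' '  prospectors:' '    -' '      paths:'
    for p in "$@"; do
      printf '        - %s\n' "$p"
    done
    printf '%s\n' '      input_type: log' '      document_type: syslog'
    printf '%s\n' 'output:' '  logstash:'
    printf '    hosts: ["%s"]\n' "$logstash"
    printf '%s\n' '    tls:'
    printf '      certificate_authorities: ["%s"]\n' "$ca"
  } > "$out"
}

# YAML forbids tabs for indentation: print any line containing a tab.
# Succeeds (exit 0) when at least one tab is found.
yaml_has_tabs() {
  grep -n "$(printf '\t')" "$1"
}
```

On the CentOS client you might pass `/var/log/messages` and `/var/log/secure` as log files; Debian typically uses `/var/log/syslog` and `/var/log/auth.log`.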

The first thing we have to do in Kibana is configure an index pattern and set it as the default. You can think of an index as a full database in a relational-database context. We will go with filebeat-* (or you can use a more precise pattern, as explained in the official documentation). Enter filebeat-* in the Index name or pattern field and then click Create:

[Figure: Testing Kibana]

Note that you can enter more fine-grained search criteria later.
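To see which indices a pattern such as `filebeat-*` covers, here is a tiny sketch using shell `case` matching. It models only the `*` wildcard; the shell's `?` and `[...]` have no counterpart in this sketch. It also assumes daily index names like `filebeat-2016.09.01`. `matches_index_pattern` is a hypothetical helper.

```sh
#!/bin/sh
# Hypothetical helper: succeed if INDEX_NAME matches a simple wildcard
# PATTERN such as "filebeat-*" (only "*" is intended here).
# Usage: matches_index_pattern INDEX_NAME PATTERN
matches_index_pattern() {
  # The unquoted $2 is deliberately treated as a shell pattern.
  case $1 in
    $2) return 0 ;;
  esac
  return 1
}
```

Combined with the Elasticsearch `_cat/indices` API, it lists the matching indices on the server: `curl -s 'http://localhost:<port>/_cat/indices?h=index' | while read -r idx; do matches_index_pattern "$idx" 'filebeat-*' && echo "$idx"; done`.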

Next, click the star inside the green rectangle to make it the default index pattern:

[Figure: Configure Default Kibana Index Pattern]

Finally, in the Discover menu you will find several fields to add to the log visualization report. Just hover over them and click Add:

[Figure: Add Log Visualization Report]

The results will be shown in the central area of the screen, as shown above. Feel free to experiment (add and remove fields from the log report) to become familiar with Kibana. By default, Kibana displays the records that were processed during the last 1…

[Figure: Kibana Log Reports]

Summary

In this article we have explained how to set up an ELK stack to collect the system logs sent by two clients, a CentOS 7 machine and a Debian 8 machine.

Now you can refer to the official Elasticsearch documentation and find more details on how to use this setup to inspect and analyze your logs more efficiently. If you have any questions, don’t hesitate to ask. We look forward to hearing from you.